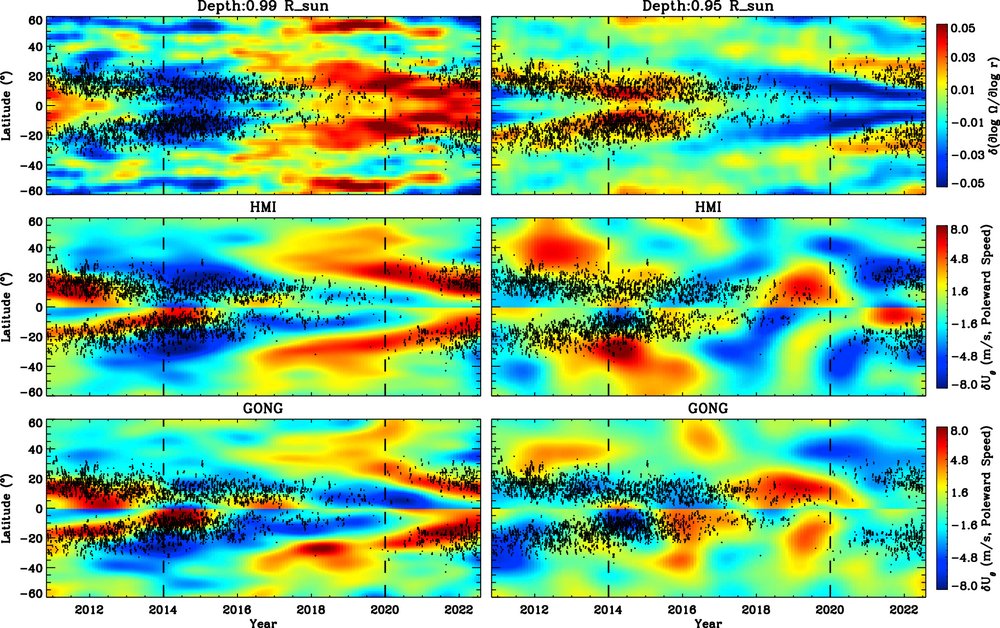

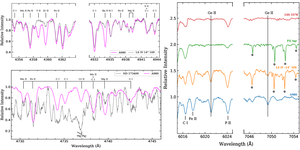

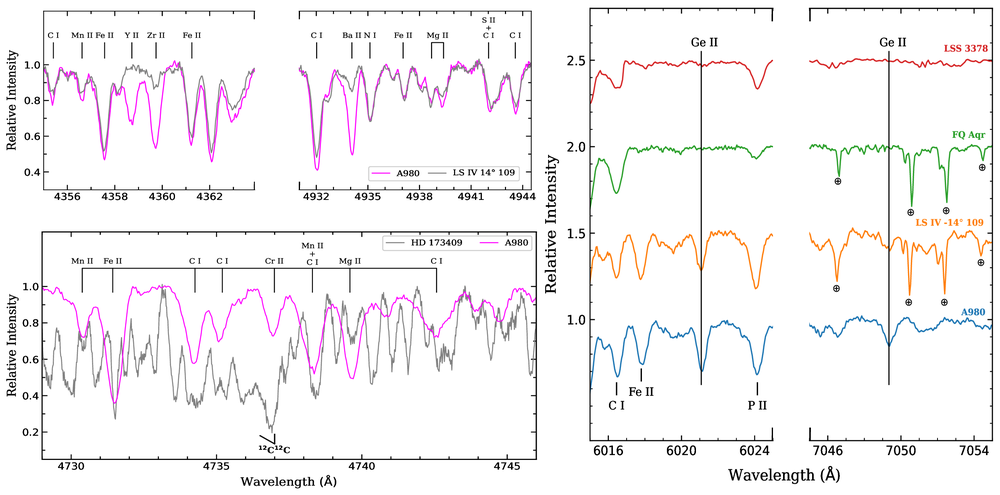

An international team of solar physicists has unveiled new insights into the dynamic "inner weather" of the Sun: plasma currents just beneath its surface that pulse in step with its 11-year sunspot cycle. In a study published on 22 April 2025 in The Astrophysical Journal Letters, researchers from the Indian Institute of Astrophysics (IIA), Stanford University (USA), and the National Solar Observatory (NSO, USA) traced how these hidden flows shift over time. The findings could reshape our understanding of how the Sun's interior connects to its outer magnetic behavior, which has a far-reaching influence on space weather and Earth.

This article is based on the paper "Solar Cycle Variations in Meridional Flows and Rotational Shear within the Sun's Near-surface Shear Layer" by Anisha Sen, S. P. Rajaguru, Abhinav Govindan Iyer, Ruizhu Chen, Junwei Zhao, and Shukur Kholikov, 2025, Astrophysical Journal Letters, 984, 1.

Press release by PIB published here.

Press release issued by DST here.

Download the paper from here.